After D211’s approval of ChatGPT Enterprise earlier this year, artificial intelligence (AI) has become a hot topic at PHS and around the country, sparking questions about its applications in the classroom and students’ data privacy.

“We have to embrace AI and teach students how to use it because otherwise, AI is going to be there, and then, if we don’t prepare our students on how to use it, there are jobs that could be lost in the future,” PHS English teacher Dana Batterton said.

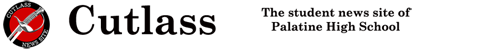

Over the summer, Batterton worked on various curriculum projects with the goal of incorporating AI into the classroom.

Screenshot, courtesy of Dana Batterton

“People automatically think that I’m going to use AI to just plagiarize an essay,” she said. “But there’s so many other ways you can utilize it.”

In her classroom, Batterton uses the technology to brainstorm ideas, edit writing and get students more comfortable with AI.

With this new technology, others believe that students will have to be evaluated differently.

“There’s going to be a big shift from a heavier emphasis on material evaluation than there used to be,” said D211 board member Kimberly Cavill. “Maybe you can use a large language model to help you generate prompts and ideas, but at the same time, you’re still going to have to evaluate the quality of those ideas being generated.”

Cavill believes that this comes in the form of shifting instruction from teaching the smaller structure of things, which is easily outsourced to an AI large language model, to more of the evaluation and the qualitative measures that are “really our responsibility in treating people as humans.”

Alex Larson, the Technology Department chair at Palatine, believes one of the most impactful ways AI will shift how educators teach is by “individualizing how they think about student growth.”

In a classroom with 24 to 32 students, it can be very difficult to focus on every student’s needs. With tools like ChatGPT, teachers can ask AI for ideas on teaching a lesson in varied ways so that each student can understand it.

Larson emphasized that, to protect students’ privacy, there would be no need to feed AI information about the students themselves.

“Professionally, [teachers] are the ones who are ultimately in the call about how they teach their content,” he said. “That informed decision-making is incredibly valuable and will never go away.”

However, with all these positive uses for the technology, many still have concerns about the data privacy of students.

Board member Cavill, who voted against the ChatGPT Enterprise agreement earlier this year, is among those with concerns.

“I am glad that I voted the way I did,” Cavill said. “Simply because I think the due diligence about software oversight ultimately rests on our shoulders as a board, and I’m not willing to put that in the hands of OpenAI’s business leadership.”

During the board meeting on Aug. 15, Cavill’s main concern was about adding third-party software to the ChatGPT platform. She questioned whether each new piece of software would need separate approval from the board or whether a “yes” vote would cover all third-party software in general.

The administration responded that none of those third-party integrations would come before the board for an individual vote, although, as of now, it has no plans to use any of them.

Although not opposed to the technology herself, Cavill would have liked to see a motion requiring any proposed third-party integration to come before the board for individual approval.

Technology Dept. Chair Alex Larson sought to clarify any confusion about third-party GPTs.

“There are lots of third-party AI companies that exist, and many of them piggyback off of ChatGPT’s back end,” Larson said. “That is the No. 1 reason why we are saying no to everything else besides ChatGPT and Firefly.”

Adobe Firefly is a generative machine learning model included as part of Adobe Creative Cloud.

“This application, we felt very strongly, was an important one for students to have an active opportunity to engage within the educational environment,” Larson said.

Because AI is a tool that will soon become a part of everyone’s life, there was a significant effort within the district and the community to get the technology approved. Despite that strong push, there were still legal regulations that had to be considered.

SOPPA, which stands for the Student Online Personal Protection Act, is an Illinois law that protects the privacy and security of students’ online data. FERPA, the Family Educational Rights and Privacy Act, is a federal law that serves the same purpose.

These laws include privacy regulations, one of which is that student data collected by educational technology companies may not be sold.

To adhere to the laws’ guidelines, the district’s legal team went through the entire vetting process for the technology, and they came to a consensus that they could draft an agreement with ChatGPT Enterprise without any privacy violations.

“We look at this stuff very seriously, because we don’t want to have any major data leaks or major issues out there,” Larson said.

Whether students go straight to the workforce or into college after high school, “we are looking at a time frame where most people are going to have to engage with AI in some capacity,” Larson said.

Because of this, the district believes it is essential that students and teachers be trained in the use of artificial intelligence to better equip them for the future.